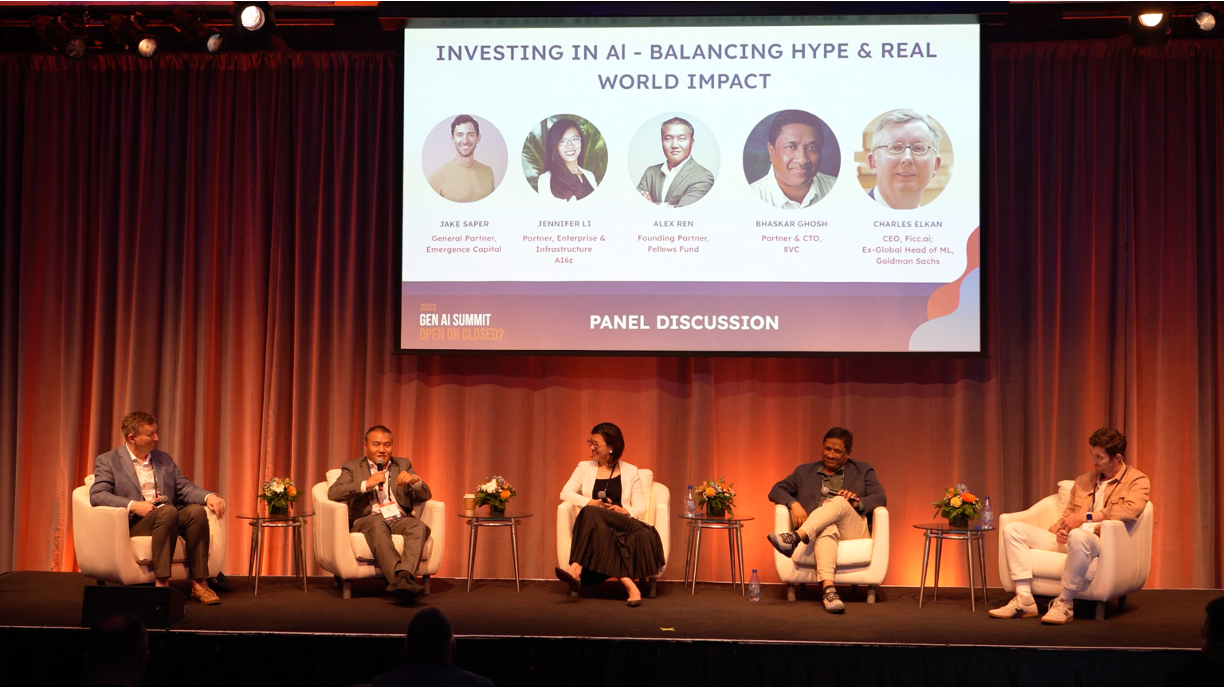

Panel 4: Investing in AI - Balancing Hype and Real-World Impact

Gen AI Summit 2023: Open or Closed?

Speakers:

Emergence Capital: Jake Saper, General Partner

A16z: Jennifer Li, Partner

Fellows Fund: Alex Ren, Founding Partner

8VC: Bhaskar Ghosh, Partner & CTO

Ficc.ai: Charles Elkan, CEO; Ex-Global Head of ML, Goldman Sachs; Professor of CS, UCSD (moderator)

Summary:

During a panel discussion on AI investment strategies, the participants provided insights into various aspects of the field. Jake Saper from Emergence Capital emphasized the importance of identifying the job to be done and building broader workflow elements to create defensible applications. Bhaskar Ghosh from 8VC highlighted opportunities in AI infrastructure, data moats, and generative AI applications. Jennifer Li from Andreessen Horowitz discussed early-stage investments in B2B enterprise and infrastructure, focusing on foundational technologies that enable intelligent workflows. Alex Ren from Fellows Fund emphasized the value of domain expertise, understanding the problem, and building products with market fit.

Regarding data strategies for AI startups, the panelists discussed the significance of proprietary data, the potential to build data through workflow products, and the importance of purposefully selecting data to drive value. On governance and regulation, most panelists saw compliance measures as crucial for driving the adoption and sale of AI solutions and highlighted the need for proper guardrails, data privacy, and compliance in both the B2B and B2C sectors, while Alex Ren cautioned that poorly executed short-term regulation, such as licensing startups, could hinder innovation. On the topic of content generation and white-collar work, the panelists argued that generative AI can improve productivity by enabling individuals to accomplish tasks that were previously challenging or inaccessible. They discussed the exponential growth of human creativity and the benefits of higher-level tools in enhancing capabilities.

Regarding the profile of successful AI startup founders, the panelists stressed the importance of domain expertise, understanding pain points, and having a product mindset. They highlighted the value of technical talent, AI expertise, and the ability to reimagine user experiences. Additionally, personal experience of the problem and a fresh perspective were considered advantageous for developing innovative solutions.

Overall, the panel provided valuable insights into AI investment strategies, data strategies, governance and regulation, the application of generative AI in physical-world industries, and the profile of successful AI startup founders.

Full Transcript:

Charles Elkan:

Well, it's a pleasure to be here. I'm really grateful to Alex Ren and Fellows Fund for organizing this, as well as to Zoom and HubSpot for their coordination. I believe we have an exciting panel with some great panelists: four partners from four very different but all important venture capital funds here in the Valley. I'll introduce them briefly and let them provide a more detailed introduction if they wish. Then we have some questions to dive into. Hopefully, the conversation will explore interesting and useful directions for the audience. Let me start with the person furthest from me. On my far left, we have Jake Saper. Jake is a general partner at Emergence Capital and a very experienced investor. Then we have Bhaskar Ghosh, also known as BG. BG is a partner at 8VC. In the middle, we have Jennifer Li, a partner at Andreessen Horowitz, who needs no introduction. Finally, to my immediate left is Alex, who has had a fascinating career in the technology industry. Most recently, he created Fellows Fund, where he is founding partner, and which is bringing a new model to venture investing in technology. We'll jump right in with our first question for each of these great investors: What is the primary focus of your AI investment strategy? Let's go in the order of the introductions, starting with Jake and moving toward me.

Jake Saper:

Sure. Thank you, Charles. And thank you, Alex, for organizing this event. I would also like to thank Zoom and HubSpot for co-hosting. It's a special conference for me personally: my wife started her first career at HubSpot as a sales rep, and many years later she is now a VC herself. Additionally, Zoom was the very first investment I led diligence on when I joined Emergence nine years ago. We feel very fortunate to be involved with both companies. At Emergence, our focus is solely on B2B software investing. That's what we've always done and what we will continue to do. We invest in companies like Zoom. Regarding AI, we are exploring ways in which AI can augment workers. A good example is what Zoom is doing with its Zoom IQ for Sales product: using data and AI to coach workers in real time and help them perform their jobs more effectively.

Bhaskar Ghosh:

Thank you, Charles, and thank you, Alex, for inviting me. I would also like to express my gratitude to Zoom, HubSpot, and everyone involved in organizing this event. Being here brings back memories of organizing talks during my time at LinkedIn and Yahoo. At 8VC, we primarily invest in enterprise software with a strong focus on AI, data, and automation. AI pervades almost everything we do, and we have made numerous vertical SaaS investments in fields ranging from bio and IT to logistics. In these companies, AI serves as an automation mechanism or enables the use of data, leading to data moats. Personally, I focus more on infrastructure and platforms related to data, AI, and the cloud. We are interested in AI infrastructure, middleware, and the overall data story, including the plumbing around AI. With generative AI entering the mainstream, we are also exploring departmental applications, both vertical and horizontal, that can benefit greatly from foundation models and generative AI. These are some of the areas we focus on.

Jennifer Li:

Great, I'll keep my part brief. Before joining Andreessen Horowitz as an investor, I worked on the product side, building developer tools at AppDynamics. I had the opportunity to use some of the earlier models to detect anomalies and worked on building conversational AI chatbots in 2017-2018, not fully realizing the transformative impact of transformers at that time. But here we are now. Since joining Andreessen, my focus has been on early-stage B2B enterprise and infrastructure investments, which form the foundation for developers and technical audiences to build upon and create application-layer experiences that enable more intelligent workflows. This includes areas such as vector databases, foundation models, and applications that can significantly enhance productivity. As a firm, we have a broad approach, covering areas from biotech to gaming to consumer experiences. We are truly excited about the future in this space.

Alex Ren:

Thank you, Charles. My name is Alex Ren, and I'm a founding partner of Fellows Fund. Our fund is unique in that it consists of 25 fellows who are leaders in this space, from organizations such as Google, Facebook, and other top tech companies. Charles is one of our distinguished fellows. Many of our fellows are in the audience, so please stand up. Among them are Jeff, a CISO at Chime; Stef, a VP at Roblox; JC Mao; and Li Deng, Chief AI Officer at Vatic and former Chief AI Officer at Citadel. Many of our fellows are deeply involved in AI applications and infrastructure. On the application side, we search for the best AI company in each sector, including accounting, insurance, healthcare, pharmaceuticals, and enterprise. Recently, we have been investing significant time in this area. That's my introduction.

Charles Elkan:

Thank you, Alex, and everyone. Now that we know about people's backgrounds and investment interests, we can move on to the first question we have prepared for this panel. Nowadays, many companies are building APIs to make generative AI available, and then other companies are building applications on top of these APIs. For both investors and founders, the natural question is, what are the potential moats when building an application on top of APIs? And conversely, when building APIs and facing competition, what are the moats for your API?

Jake Saper:

I'm happy to start with this one. When it comes to application-layer software investing, now that everyone has access to great technologies like OpenAI's GPT, Copilot, and other foundation models, the question becomes: what aspects of your application are defensible and durable? At Emergence, we approach this using Clay Christensen's jobs-to-be-done framework. We start by identifying the specific job that the technology is intended to perform. Then we zoom out and ask whether the majority of that job can be accomplished by directly accessing a large language model (LLM) or similar services. If the answer is yes, and most of the job can be done by going straight to ChatGPT or other services, it's unlikely that what you're building will be defensible and durable. However, many jobs within the enterprise require much more than the model itself. We need to consider the broader SaaS suite necessary to fulfill the job to be done, and amid the hype surrounding generative AI, we tend to overlook these essential components. For example, if you're working on legal contracting and using AI to assist in editing contracts, that's valuable. But to fully accomplish the job to be done for legal contracts, you need to build additional features, such as text editing, permission management, collaboration, approval processes, and even a signature tool. It's the combination of these broader workflow elements that we find truly exciting and critical for building defensible applications. There are no easy shortcuts when it comes to building great software. Once you have the workflow in place and people are actively using the software, the next step is to gather business outcomes data from that workflow. Many have discussed the importance of proprietary data in the context of generative AI.
At Emergence, we believe that the most valuable proprietary data in generative AI will be the business outcomes data. For example, in the case of legal contracts, it's not necessarily the specific contracting data that's proprietary, but rather the insights gained from analyzing the outcomes of using the AI-enabled workflow. By leveraging this business outcomes data, companies can create a closed loop to improve their models and make better recommendations in the future. This approach contributes to long-term defensibility.

Jennifer Li:

I very much agree with Jake's framework. From an infrastructure point of view, the infrastructure an application is built on has never been much of a differentiator for the application itself. It doesn't matter which database you're using under the hood as long as the product provides a good user experience. What matters is meeting user demands and having the integrations and features users expect to complete their tasks or workflows. This is where an application's value lies and where improvements should be focused. The barriers to building products on top of open APIs are now lower, thanks to hackathons and models available through APIs, which is an exciting development. This enables faster, more innovative improvements to the user experience and the creation of experiences that were previously unimaginable, in both B2B and B2C applications. For example, conversational AI, voice cloning, and image generation models can be applied to enhance the day-to-day activities of knowledge workers.

Bhaskar Ghosh:

I would like to add something. Jennifer and Jake are doing an amazing job in the application and workflow tier. It is clear that large enterprises with significant customer data, including text and unstructured data, will benefit greatly. They can perform tasks like extraction and Q&A more effectively. However, it remains unclear what startups can do in this area. Recently, I spoke with people from ServiceNow, Zendesk, and Salesforce, and observed that their focus is on the source of truth and workflow capabilities. The workflow and no-code/low-code aspects of their products are extremely important. With the help of LLMs, it's possible to generate these workflows more easily and incorporate some level of human involvement. So, it seems that large companies will benefit the most in the short term. The question is whether startups can build the necessary infrastructure to support these workflows. At 8VC, we are particularly interested in the application tier and marketing, but we're not sure if there are many hot companies in those areas. There are other areas we are exploring, such as internet orchestration, the development of new databases, data ingestion and formatting adapters, prompt engineering, and addressing regulatory and privacy concerns. These areas offer rich opportunities for startups and investments, and at 8VC, we are highly interested in exploring them.

Alex Ren:

I agree with all of you. Whether to focus on infrastructure or applications when building a company depends on various factors. The definition of 'moat' can be confusing because, from a technical perspective, if you use APIs, there are no barriers. However, when it comes to founders building companies, the best builders are those who can achieve product-market fit quickly. So it's essential to have domain knowledge, understand the product and the problem, and build a product with traction and paying customers. You don't need to train models from day one; you can leverage APIs. That is my suggestion for founders building AI application companies. On the other hand, if you are an infrastructure company, you need to focus on data and technology, which is a different situation. So the two paths call for different approaches. By using APIs and incorporating AI into the product, you can deliver value to customers with fewer hires and build a strong moat in the application tier.

Charles Elkan:

Thank you. I think all of you have already touched on our next question in some way. So, your answers can be brief or expanded further if you have more to add. The question is: What is an effective data strategy for AI startups? You have all emphasized the importance of data, and while large organizations often have proprietary data, most startups lack such data. So, what would be an effective data strategy for AI startups?

Jake Saper:

Well, you can start with a workflow. A workflow product lets you create proprietary data, so you don't necessarily need to start with it. There's a cold start problem for anyone who doesn't have data, and you can overcome it by building a workflow product, gathering data, and tying it to outcomes. I've also seen creative business development (BD) deals where startups approach legacy companies to negotiate data access. These deals could involve investments, revenue sharing, or IP sharing, although the latter can be risky. So there are various ways for startups to obtain proprietary data. However, I believe there is an overemphasis on this aspect in the early stages, which, in my opinion, is a mistake. In the spirit of co-moderating, one thing I've changed my mind on regarding AI compared to four years ago is the importance of having proprietary data upfront. I used to believe that a generalist model could not be accurate enough to solve specific problems. If you had told me four years ago that a generalist language model (LM) could pass the bar exam or a medical licensing exam, I wouldn't have believed it, because I thought domain-specific models were required. The reality is that many generalist models are now highly capable, and you don't need an extensive amount of proprietary data to get started. You can begin with a general model, build a workflow tool to gather more specific data, and gradually fine-tune the existing model or build your own model on proprietary data over time. Compared to four years ago, startups have many more options.

Jennifer Li:

It's a very interesting comparison to four years ago. I remember when I was at a startup, the pitch was always about having proprietary customer service data to train better models than others, particularly in the generative sense. However, the barriers are now much lower. You don't have to start by gathering a large number of tickets or call notes. The capabilities of generative models are quite impressive. The key is to determine where to target them and how to fine-tune or prompt-tune the model itself to serve specific purposes. Let me provide an example that impressed me. There are many design tools attempting to compete with Adobe by enabling the generation of graphics and photos. However, one company focuses solely on texture generation, because textures are a critical component in game design and various products. They have been able to fine-tune their own model by gathering purposeful and unique data, allowing them to excel at generating a wide range of textures. They can even extend textures to virtually any size to fill screens, billboards, slide decks, and more. This reflects the current data strategy of many startups: the emphasis is not on gathering an enormous amount of proprietary data, but on purposefully selecting data that contributes to the flywheel effect and drives value. That's my perspective.

Bhaskar Ghosh:

I'm not an expert on the subject, so I won't say much. Startups trying to build amazingly deep new models probably face a challenging business model as they would need significant upfront investment. I agree with Jennifer and Jake. What surprises me in the realm of machine learning and data is how good the existing models are and how little incremental value additional data might provide. My mind often goes back to the concept of plumbing. If you're working with customers' first-party data, what problems can you solve that they can't? Ultimately, you'll be leveraging the customers' data, but the question is how you can add a high-value delta to their product management. I haven't made up my mind about what will happen, but I keep thinking about how the human-in-the-loop concept will work in that context and how data cleansing can be achieved. So, it's not just about obtaining extra data but also about what you can do with it and whether there is sufficient plumbing infrastructure in place to enable quick actions. It might be a boring answer, but that's my perspective.

Charles Elkan:

Thank you for the answers. The theme of this panel is investing in AI, so let's move on to another question related to the investment environment. Alex mentioned the discussions around regulation during his recent trip to Germany, and as someone in the financial industry based in New York, I see that regulation, governance, and compliance are top concerns when it comes to new technologies. So, my question for the panel is: Will governance and regulation be opportunities or obstacles for AI startups, especially in terms of privacy and other related areas? How do you view compliance, regulation, and governance?

Jake Saper:

I'm happy to start. I see governance and regulation as a positive development. We recently witnessed Sam Altman asking to be regulated on Capitol Hill, which was a significant moment in the history of tech. The importance of regulation goes beyond the betterment of humanity, although that's the most important aspect; it's also crucial for the successful adoption and sale of AI solutions. Without proper guardrails and compliance measures, we risk an FTX-style moment for AI: an enterprise uses a model without adequate oversight and a significant problem occurs, such as data leakage or unauthorized financial transactions. Such incidents can shake enterprises' confidence and make them reluctant to purchase AI solutions. We've already seen the Samsung case, where employees shared confidential information with ChatGPT, which raised concerns for many enterprises. Therefore, I believe compliance will play a role in driving adoption, and it won't necessarily be driven solely by the government. Businesses will demand compliance before purchasing AI solutions.

Bhaskar Ghosh:

I would like to add to that. There are complex questions about the impact of automation on jobs and society that we won't delve into in this panel. However, within the context of compliance and governance in the B2B sector, privacy is just one aspect; we can think of it as industrial privacy. For instance, if you're building a product internally and using APIs, it's vital to have guardrails that control the data being transmitted to external systems or different VPCs. Similarly, if you're building a model and ingesting third-party and open data, it's crucial to ensure you're not infringing copyright and to have mechanisms in place to roll back the model if issues arise. These are challenging problems from both a process and a technical perspective. Another consideration arises when you build a data-as-a-service business, a model whose appeal is reflected in how Snowflake Marketplace is thriving. As a data-as-a-service business, you need to ensure legal compliance when selling data blended from first-, second-, and third-party sources, and to address concerns related to scraping websites and other issues that might arise. Compliance and regulation in the B2B context are already significant and pose hurdles for large companies. Smaller companies focusing on workflows, NPI (non-public information) handling, and plumbing may find opportunities by articulating these B2B problems effectively. This is an area of interest for us at 8VC, and we believe there is potential. Anyway, this is one of my favorite topics, and I'm glad you asked.

Alex Ren:

In the long term, I think regulation is good. But in the short term, the issue is how to execute it and who should be responsible for it, and people need to understand that deeply. If an agency issues licenses to startups, it may slow down and hinder innovation. This is something to consider, since we have not yet reached a stage where AGI is a reality. So I believe effective execution of regulatory measures is still far away.

Charles Elkan:

Moving on to the theme of investment opportunities and challenges. Something that we often overlook in the tech industry is how much of the economy exists in the physical world. So my question is, where can generative AI provide value in physical-world industries? I'm thinking of construction, manufacturing, logistics, retail, food service, and transport, all sectors that may not immediately appear tech-focused. What are the opportunities for generative AI?

Alex Ren:

We have been closely following various sectors such as robotics, AR, and real-world applications. What I have learned is that the most significant innovation today lies in natural language models, specifically in language processing and text generation. Looking back at the past 10 years, sectors like self-driving cars and robotics have struggled to become profitable because of thin margins, and training robots requires valuable data that is often scarce. For instance, the self-driving car industry is still losing money rather than generating profits. By contrast, applying large language models to enterprise applications and content generation has proven more profitable, so that is where I see the more promising opportunities today.

Jennifer Li:

I think this is a topic that has also been widely discussed in the public media, and it's quite interesting. Large language models, or generative AI in general, tend to disrupt white-collar jobs more than jobs in retail or manufacturing, which are often considered blue-collar jobs. That's one observation I would like to make regarding the impact. However, there are still numerous applications that can be developed, for example, document processing for logistics transactions, loan processing, and, in the retail sector, personalized product experiences for customers. There are many areas where generative AI can be applied today. Of course, there is ongoing research on applying this intelligence to robotics and developing robots with more human-level capabilities for broader kinds of work. So there are plenty of opportunities to leverage generative AI, but currently, we mostly see it applied in creative work and specific use cases.

Bhaskar Ghosh:

Yeah, I think there's a less exciting part of the answer that we have already discussed: next-gen RPA will likely thrive in traditional areas such as back-office processes and human-in-the-loop process automation, where a significant amount of unstructured data and text is involved, for example, invoice processing and accounts payable. These opportunities are not limited to those areas, though; we anticipate that supply chain management will also see significant impact. On the other hand, when it comes to the more complex task of building multimodal models for audio, video, and images, I don't have much expertise, and I haven't yet seen them ready for sophisticated applications, but I could be mistaken. At 8VC, we haven't focused on that aspect. So the less exciting part is that RPA 3.0, meaning automation in traditional industries, presents a ripe opportunity. Whether successful software businesses will emerge in these areas remains to be seen. If we look at how established players like UiPath, Automation Anywhere, Pega, and Blue Prism have performed, building successful business models has been challenging, and customer satisfaction might not be high. It requires someone to write high-quality software, whether or not generative AI is used. Nevertheless, we believe there is a significant opportunity in traditional industries.

Jake Saper:

I can provide two quick, specific examples of AI in physical industries. One company we work with is called DroneDeploy, which sells software that helps farmers, construction workers, and others in the field fly drones autonomously, gather imagery data, and make decisions based on that data. This includes estimating crop quantities, such as counting the number of corn stalks in a field, and helping construction sites identify discrepancies between planned and actual construction. Over the past six months, they have integrated diffusion models, which have greatly improved their capabilities. Another company is Drishti, which uses computer vision in manufacturing to help workers assemble products more effectively. It's fascinating to witness the impact of these models on their work. So I do believe there will be real-world impact, as the panel's topic suggests.

Charles Elkan:

Thank you, Jake. It's truly inspiring to hear these concrete examples of how new technologies are enhancing productivity in the real world, which benefits society as a whole. One phrase that has been mentioned by other panelists is 'content generation,' and there has been a distinction made between blue-collar and white-collar work. Now, I would like to pose a slightly provocative question. Can we argue that a significant portion of white-collar work is more zero-sum, akin to an arms race, and does not necessarily contribute to overall productivity? To be more specific, let's say you're Home Depot and you send a large volume of advertising emails to your customers. Content generation can improve the quantity and quality of those ads, but is it merely a competition with limited gains? Where can content generation, both in terms of quantity and quality, genuinely increase productivity rather than being zero-sum?

Alex Ren:

I'm not an expert in advertising, but based on my personal experience, let me provide an analogy. Suppose I have a private party or a special event. In the past, I would hire a designer to work on creating a poster for me, which would take a few days. However, as someone who is not skilled in design, I might end up being unhappy with the results. Nowadays, with generative AI, I can create a beautiful poster in just three minutes using a general-purpose model. What I have observed is that generative AI, including the broader field of AI, enables us to work on things that were previously beyond our capabilities. For example, someone who is not an expert in business or investment may now be able to participate and gain insights using AI. From this perspective, productivity increases when we can accomplish tasks that were previously challenging or inaccessible. So, in my understanding, generative AI can enhance productivity in this way.

Jennifer Li:

I'll provide a bit more input. I don't believe it's a zero-sum game. Human creativity and content creation have been on an exponential curve. We now generate more data in a single year than we did in the previous 50 years, if I recall correctly. I completely agree that productivity gains come from our ability to do things we couldn't do before. For example, with GitHub Copilot, I started coding again, and I was able to use AI-enabled design tools to create something I'm not embarrassed about. These are productivity gains where developers can write code 10 times faster and people can leverage higher-level tools to enhance their capabilities. This, to me, is the essential innovation happening in the field.

Charles Elkan:

Thank you. Unfortunately, we have only a couple of minutes left. So, for the last question, I will shift gears. In the world of generative AI, what does the founder profile look like? When you talk to potential founders and they pitch their teams, what are you looking for? What sets apart a successful founding team in this domain?

Jake Saper:

It's a great question. One critical trait for founders, not only in this domain but in any context, is speed. The rate of learning and execution is crucial because things are rapidly evolving. Additionally, founders who are solving a problem they personally experienced have an unfair advantage. In a space where countless startups are emerging, anyone can code something up on OpenAI over the weekend. However, if you have personally felt a specific pain point, you likely have a unique perspective that others don't. So, if you build a solution based on that, especially given the current hype in the field, you may have an advantage.

Bhaskar Ghosh:

I would say that if you're building an application company that relies heavily on generative AI, the founding team should have deep knowledge of the domain and its workflows. They need to intimately understand the pain points, the personas they are targeting, and why customers would pay for their solution. While an AI co-founder may not always be necessary, someone with AI expertise should be involved. For plumbing and infrastructure, the requirements may differ. For instance, if you're entering the new field of foundation model operations, the next generation of AI infrastructure lifecycle management, you might need someone with extensive experience in that area from a major company. If you focus on the plumbing and orchestration side, at least one founder should come from a background that is familiar with AI and has knowledge of that domain. So it really depends on the specific context, but there are different profiles that can be successful.

Jennifer Li:

I largely agree with the emphasis on domain expertise. In our team at Andreessen, we also value the technical talent that has a strong product mindset. It's crucial to consider how to productize a core technology for adoption and application. Another important aspect is understanding how to integrate the solution into existing workflows and migrate users to a completely new experience. However, it's worth noting that generative AI capabilities often require a reimagining of the end-user experience, which may not fit into existing frameworks. So, in some cases, domain knowledge from the past may actually hinder the ability to envision and revolutionize new creations and how they transform user experiences. Thus, we have also seen successful founders coming from diverse backgrounds, such as design and graphic creation, who can think outside the box and reimagine the journey.

Jake Saper:

I think it's essential to clarify that my argument is not about coming from the domain but rather having personally experienced the problem. If you have felt the pain point but were not part of the solution before, you may bring a fresh perspective to developing a new solution.

Alex Ren:

I agree with the other panel speakers. When investing in the best business builders, I want to emphasize the fit between the founders and the users, as well as the market. This is critical. We can find hundreds of thousands of mechanical engineers and AI engineers, but only a few hundred people can truly build great businesses. So, understanding the product definition and go-to-market strategy is crucial. Technical expertise or deep research knowledge may not be as important as knowing the domain, the product, and the market. It is challenging to find individuals who are deeply knowledgeable in both AI and the specific domain, but if needed, we can introduce AI leaders to join the team. The key is how to educate and align them with those who possess profound domain and product expertise. So, I think the most important factor is finding founders with the right market fit.

Call to Action

AI enthusiasts, entrepreneurs, and founders are encouraged to get involved in future discussions by reaching out to Fellows Fund at ai@fellows.fund. Whether you want to attend our conversations or engage in AI startups and investments, don't hesitate to connect with us. We look forward to hearing from you and exploring the exciting world of AI together.